Consider an RDD named `rdd` built from the two strings "Hadoop In Real World" and "Big Data". It has 2 elements of type String.

map() transforms an RDD with N elements into an RDD with N elements. The important thing to note is that each element is transformed into exactly one other element, so the resulting RDD has the same number of elements as before. Applying split() to each element of this RDD breaks the line into words wherever it sees a space between them:

`scala> rdd.map(_.split(" ")).collect`

`res0: Array[Array[String]] = Array(Array(Hadoop, In, Real, World), Array(Big, Data))`

The result RDD also has 2 elements, but each element is now an Array and not a String as in the initial RDD. Each Array holds the list of words from the corresponding initial String. Running `rdd.map(_.split(" ")).count` returns 2, which is the same as the count of the initial RDD before the split() transformation.

flatMap() transforms an RDD with N elements into an RDD with potentially more than N elements. Let's try the same split() operation with flatMap():

`scala> rdd.flatMap(_.split(" ")).collect`

`res1: Array[String] = Array(Hadoop, In, Real, World, Big, Data)`

With flatMap() the collected result is not Array[Array[String]] as with map(); it is Array[String]. flatMap() took care of "flattening" the output from Arrays to plain Strings. When you call count() on the result RDD, you get 6, which is more than the number of elements in the initial RDD.

As for how flatMap itself is implemented: that inevitably depends a lot on the data structure you are implementing it for. Since Scala and its libraries are all open source, you can go and see how it is implemented for the various collections in the API.
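The difference in element counts described above can be tried without a Spark cluster. The following is a minimal sketch that uses a plain Scala `Seq` as a stand-in for the RDD (an assumption made only so the example runs on its own); the object name `MapVsFlatMap` is hypothetical.

```scala
// Plain Scala collections stand in for the RDD here (an assumption made so
// the sketch runs without Spark); map and flatMap follow the same
// one-to-one versus one-to-many rules.
object MapVsFlatMap {
  val lines: Seq[String] = Seq("Hadoop In Real World", "Big Data")

  // map: exactly one output element per input element,
  // so 2 lines produce 2 arrays of words.
  val mapped: Seq[Array[String]] = lines.map(_.split(" "))

  // flatMap: the words of every line are spliced into one flat sequence.
  val flattened: Seq[String] = lines.flatMap(_.split(" "))

  def main(args: Array[String]): Unit = {
    println(mapped.size)               // number of word arrays
    println(flattened.size)            // number of individual words
    println(flattened.mkString(", "))
  }
}
```

With the two input lines used in this post, `mapped` keeps 2 elements while `flattened` holds the 6 individual words.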

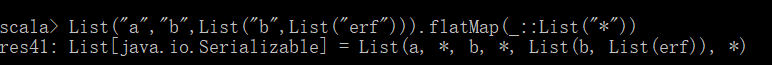

Both map and flatMap are transformation functions. When applied to an RDD, each of them transforms every element inside the RDD into something else. flatMap() works like map() in that it returns a new RDD by applying a function to each element of the RDD, but the output is flattened, so the function may return a whole list of elements for each input. Let us look at an example to understand the difference in detail.

Consider this simple RDD:

`scala> val rdd = sc.parallelize(Seq("Hadoop In Real World", "Big Data"))`

The REPL reports it as an RDD of String backed by a ParallelCollectionRDD.

flatMap is a combination of map and flatten: it first runs map on the sequence, then runs flatten on the result. Because flatMap treats a String as a sequence of Char, a function that returns Strings has its results flattened into a sequence of characters (Seq[Char]).

For JavaScript Arrays, an implementation of flatMap() has the signature `function flatMap(arr, mapFunc)`. In line A, the Array element `', '` is conditionally inserted via the spread operator (`...`). This trick is explained in another blog post. Due to flatMap(), TagList is rendered as a single flat Array: the first tag contributes one element to this Array (a link), and each of the remaining tags contributes two elements (a comma and a link).
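To make the "map followed by flatten" description concrete, here is a small sketch on plain Scala collections. The object name `FlatMapAsMapFlatten` and the sample words are assumptions chosen for illustration, not taken from the original example.

```scala
// A sketch on plain Scala collections; names and sample data are illustrative.
object FlatMapAsMapFlatten {
  val words: Seq[String] = Seq("Big", "Data")

  // Two steps: map produces a nested Seq[Seq[String]], flatten removes one level.
  val twoStep: Seq[String] = words.map(w => Seq(w, w.toUpperCase)).flatten

  // One step: flatMap applies the function and flattens in a single call.
  val oneStep: Seq[String] = words.flatMap(w => Seq(w, w.toUpperCase))

  // A String is treated as a sequence of Char, so a function that returns
  // a String is flattened down to individual characters.
  val chars: Seq[Char] = words.flatMap(w => w.toUpperCase)

  def main(args: Array[String]): Unit = {
    println(twoStep == oneStep)  // both routes build the same sequence
    println(oneStep)
    println(chars)
  }
}
```

The last value is the case to watch for: returning a String from the function passed to flatMap yields characters, not words.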

In this blog post, we look at the operation flatMap, which is similar to the Array method map() but more versatile. In our previous posts we talked about groupByKey, map and flatMap.

Both map() and flatMap() take a function f as a parameter that controls how an input Array is translated to an output Array. With map(), each input Array element is translated to exactly one output element. With flatMap(), each input Array element is translated to zero or more output elements.

The Array method .flat() is equivalent to the function flatten() described in this blog post, and .flatMap() is equivalent to the function flatMap() described in this blog post.
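The "exactly one" versus "zero or more" rule can be sketched in Scala as follows. The helper `repeat` and the object name `ZeroOrMore` are hypothetical, introduced only to give each input a different number of outputs.

```scala
// Illustrative sketch; `repeat` is a hypothetical helper, not a library function.
object ZeroOrMore {
  // Expands n into n copies of itself: 0 yields nothing,
  // 1 yields one element, 3 yields three elements.
  def repeat(n: Int): Seq[Int] = Seq.fill(n)(n)

  val input: Seq[Int] = Seq(0, 1, 3)

  // map keeps one output per input, even when that output is an empty Seq.
  val viaMap: Seq[Seq[Int]] = input.map(repeat)

  // flatMap drops the empty result and splices the rest together.
  val viaFlatMap: Seq[Int] = input.flatMap(repeat)

  def main(args: Array[String]): Unit = {
    println(viaMap)
    println(viaFlatMap)
  }
}
```

Here `viaMap` still has three elements, one per input, while `viaFlatMap` has four, because the input 0 contributes nothing and the input 3 contributes three.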


Update: The Array methods .flat() and .flatMap() were added in ECMAScript 2019 (based on a proposal by Michael Ficarra, Brian Terlson and Mathias Bynens).

A fundamental concept of functional Scala is that functions act as first-class values.
